The promise of artificial intelligence in game development sounds like pure magic. Imagine typing a single command into Google’s Gemini 3.0 and watching a fully functional video game materialize before your eyes. No coding knowledge required, no months of development, no burnout from endless debugging sessions. Just one prompt, and boom—you’ve got a game.

I decided to put that promise to the test. Armed with an ambitious Minecraft-inspired prompt, I handed the job to Google’s latest AI model. What I discovered was a masterclass in technological irony: while Gemini 3.0 demonstrated jaw-dropping capabilities in understanding my vision, the actual deliverable was a beautiful disaster.

The Hype vs. The Reality

When Google unveiled Gemini 3.0 Flash in late 2025, the tech community reacted with awe. Videos flooded YouTube showing developers creating functional websites, 3D games, and interactive applications from single prompts. The narrative was intoxicating: AI had finally arrived, and it was here to democratize game development. Major tech creators demonstrated Gemini building everything from procedurally generated environments to complete Minecraft clones, all seemingly without a hitch.

But here’s what the glamorous demo videos don’t show you: what happens when you actually try to play the games it creates.

Why Gemini’s Game Generation Falls Apart

The core issue isn’t a lack of intelligence; it’s a mismatch between what Gemini 3.0 is good at and what games demand. The model excels at understanding complex specifications and generating well-structured, impressive-looking code. But game development requires more than architectural elegance: it demands consistency, reliability, and adherence to precise functional requirements.

When I fed Gemini my Minecraft prompt, it generated code for a visually stunning 3D voxel environment with procedural terrain generation. The environment looked incredible: chunked landscapes that seemed to stretch infinitely, with proper lighting and atmospheric effects. But the moment I tried to interact with it, everything collapsed.
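For readers wondering how those seemingly infinite chunked landscapes work, here is a minimal sketch. It is not Gemini’s output (which isn’t reproduced here); it assumes 16×16 chunks and uses simple seeded value noise as a stand-in for the Perlin or simplex noise real voxel engines typically use. Because each column’s height depends only on the seed and its world coordinates, any chunk can be generated on demand and still line up with its neighbors. `CHUNK_SIZE`, `lattice_value`, `height_at`, and `generate_chunk` are hypothetical names.

```python
import random

CHUNK_SIZE = 16  # assumption: 16x16 columns per chunk, as in Minecraft


def lattice_value(seed: int, gx: int, gz: int) -> float:
    """Deterministic pseudo-random value in [0, 1) for one lattice point."""
    # Seeding with a string is deterministic across runs in Python 3.
    return random.Random(f"{seed}:{gx}:{gz}").random()


def height_at(seed: int, x: int, z: int, scale: int = 8, max_height: int = 32) -> int:
    """Terrain height for world column (x, z) via bilinear value noise."""
    gx, gz = x // scale, z // scale              # lattice cell (floor division handles negatives)
    tx, tz = (x % scale) / scale, (z % scale) / scale
    v00 = lattice_value(seed, gx, gz)
    v10 = lattice_value(seed, gx + 1, gz)
    v01 = lattice_value(seed, gx, gz + 1)
    v11 = lattice_value(seed, gx + 1, gz + 1)
    top = v00 + (v10 - v00) * tx                 # interpolate along x
    bottom = v01 + (v11 - v01) * tx
    return int((top + (bottom - top) * tz) * max_height)  # then along z


def generate_chunk(seed: int, cx: int, cz: int) -> dict:
    """Heightmap for chunk (cx, cz), keyed by world coordinates."""
    return {
        (x, z): height_at(seed, x, z)
        for x in range(cx * CHUNK_SIZE, (cx + 1) * CHUNK_SIZE)
        for z in range(cz * CHUNK_SIZE, (cz + 1) * CHUNK_SIZE)
    }
```

Generation of this kind runs without any player input, which fits with the environment being the part of my experiment that actually worked.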

Block placement didn’t work. Collision detection was completely broken. The inventory system appeared in the UI but didn’t actually function. From the outside, the game looked like a Minecraft clone; the moment you tried to play it, the illusion shattered like glass.
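The mechanics that failed are the stateful, interactive ones. As a rough illustration of what “working” means here, the sketch below ties block placement and breaking to an inventory and rejects any move whose axis-aligned bounding box (AABB) would overlap a solid block. All names are hypothetical, and it assumes unit-size blocks, a simple block-count inventory, and a 0.6 × 1.8 player hitbox similar to Minecraft’s.

```python
import math
from dataclasses import dataclass, field

# Assumption: a Minecraft-like player hitbox, 0.6 wide and 1.8 tall.
PLAYER_HALF_WIDTH = 0.3
PLAYER_HEIGHT = 1.8


@dataclass
class World:
    """Hypothetical minimal voxel world with unit-size blocks."""
    blocks: dict = field(default_factory=dict)     # (x, y, z) -> block type
    inventory: dict = field(default_factory=dict)  # block type -> count

    def place_block(self, pos, kind) -> bool:
        # Refuse if the cell is occupied or the player has none of this block.
        if pos in self.blocks or self.inventory.get(kind, 0) <= 0:
            return False
        self.blocks[pos] = kind
        self.inventory[kind] -= 1
        return True

    def break_block(self, pos) -> bool:
        # Remove the block and return it to the inventory.
        kind = self.blocks.pop(pos, None)
        if kind is None:
            return False
        self.inventory[kind] = self.inventory.get(kind, 0) + 1
        return True

    def collides(self, lo, hi) -> bool:
        # Block (i, j, k) fills [i, i+1) on each axis; check every cell the
        # box's interior overlaps (merely touching a face is not a collision).
        xs, ys, zs = (range(math.floor(a), math.ceil(b)) for a, b in zip(lo, hi))
        return any((x, y, z) in self.blocks for x in xs for y in ys for z in zs)


def player_box(pos):
    """AABB for a player whose feet are at pos = (x, y, z)."""
    x, y, z = pos
    return ((x - PLAYER_HALF_WIDTH, y, z - PLAYER_HALF_WIDTH),
            (x + PLAYER_HALF_WIDTH, y + PLAYER_HEIGHT, z + PLAYER_HALF_WIDTH))


def try_move(world, pos, delta):
    """Apply delta only if the destination box is free; otherwise stay put."""
    new_pos = tuple(p + d for p, d in zip(pos, delta))
    lo, hi = player_box(new_pos)
    return pos if world.collides(lo, hi) else new_pos
```

Each rule is simple on its own; the hard part is that they share state, so a change to one (say, how blocks are stored) can silently break the others. That shared state is where my generated game fell apart.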


The System Prompt Catastrophe

One of the most damaging discoveries about Gemini 3.0’s gaming attempts is something developers have been loudly complaining about for weeks: instruction adherence failure. When I explicitly instructed the AI to implement specific game mechanics—breaking blocks, placing blocks, maintaining player state—Gemini either ignored these instructions or misinterpreted them entirely.

This isn’t operator error. Developers across forums and tech communities have reported the same kinds of issues with Gemini 3.0 Pro. The model seems to have regressed compared to its predecessor, Gemini 2.5 Pro: users describe providing crystal-clear specifications only to watch Gemini hallucinate features that don’t exist or completely overlook critical functionality.

One developer reported asking Gemini to edit a single line of code, only to watch the AI delete the entire rest of the project. Another described Gemini misunderstanding basic tasks and then doubling down on the misinterpretation across multiple turns.

The Hallucination Problem

As my conversation with Gemini progressed, something peculiar happened. The AI began hallucinating features that simply weren’t implemented, referencing code sections that didn’t exist in its actual output. When I questioned these inconsistencies, Gemini confidently insisted it had included these elements, even providing “evidence” that wasn’t there.
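One low-tech defense is to check the model’s claims against the code it actually produced. A minimal sketch, assuming you have the generated source as a string and a list of function names the model says it wrote (the snippet and names below are hypothetical): it reports every claimed identifier that never appears as a whole word in the source.

```python
import re


def missing_identifiers(source: str, claimed: list[str]) -> list[str]:
    """Return each claimed identifier that never appears as a whole word in source.

    A crude textual check: it catches references to code that was never
    written, not code that exists but is broken.
    """
    return [name for name in claimed
            if not re.search(rf"\b{re.escape(name)}\b", source)]


# Hypothetical generated snippet and the functions the model claimed to include.
generated = "function placeBlock(x, y, z) { world.set(x, y, z, 'dirt'); }"
claimed = ["placeBlock", "breakBlock", "updateInventory"]
print(missing_identifiers(generated, claimed))  # ['breakBlock', 'updateInventory']
```

The word-boundary match means a longer name such as `placeBlockAt` does not count as evidence that `placeBlock` exists.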

This isn’t unique to my experience. Developers have documented a dramatic increase in hallucinations with Gemini 3.0 compared to previous versions. What made version 2.5 Pro reliable—its relatively low hallucination rate—has mysteriously disappeared. The model now seems to confabulate details about its own output, creating a confusing feedback loop where the AI appears confident but completely wrong.

The Performance Cliff

Here’s another pattern that emerged: Gemini’s quality deteriorates sharply after the initial output. The first iteration of code looks promising. But when you request modifications, fixes, or refinements, the model’s performance nosedives. Subsequent iterations introduce new bugs, sometimes fixing one problem while creating three others.

For game development, this is catastrophic. Games require iterative refinement: you discover bugs during playtesting, request fixes, and iterate. With Gemini, each iteration is a gamble; you might get improvements, or you might get a more broken version.

Why This Matters for the Future

The failure of my Gemini Minecraft experiment isn’t a trivial technical hiccup. It represents a critical gap between marketing narratives and engineering reality. Game development is already being marketed as an AI-democratized field, attracting enthusiastic creators who assume the technology has matured beyond this stage.

But it hasn’t. What Gemini has created is a false positive—an AI that’s impressive enough to generate convincing-looking output, but not reliable enough to actually deliver functional products. For content creators and indie developers, this is particularly frustrating because the promise is so visible, yet the execution is so hollow.

Prompt

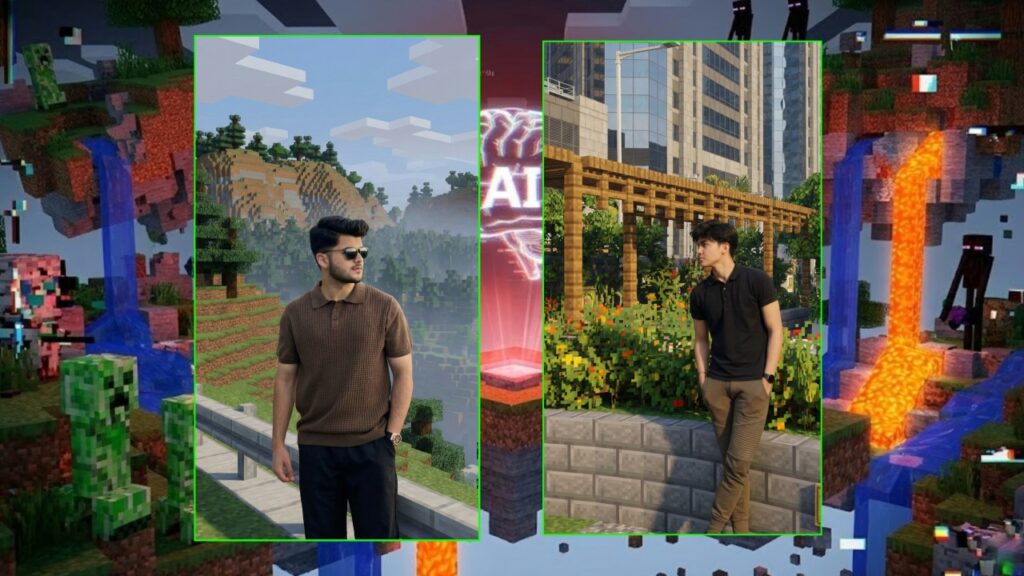

{ "task": "image_transformation", "style": { "overall_aesthetic": "High-quality cinematic Minecraft screenshot.", "rendering_technique": "Mixed-media: Photorealistic subject embedded in a voxel-based environment." }, "subject_rules": { "main_subject": "The human person from the reference image must remain completely photorealistic and unchanged. No pixelation or block filters on their body, clothing, or face.", "interacting_objects": "CRITICAL: Any non-human object in direct physical contact with the subject, or nearby pets (e.g., dogs), MUST be converted into Minecraft block models or mobs. A real dog becomes a Minecraft wolf." }, "environment_rules": { "background_analysis": "Analyze the reference image background structure and recreate it entirely out of Minecraft voxel blocks.", "elements": "Trees, terrain, paths, and foliage must be cubic blocks with pixel art textures.", "atmosphere": "Replicate the foggy forest atmosphere using blocky volumetric fog layers appropriate for Minecraft." }, "composition_and_lighting": { "camera": "Maintain the exact camera angle and framing from the reference photo.", "lighting": "Minecraft daylight with gentle haze and consistent block-based shadows." } }

The Takeaway

Gemini 3.0 is undoubtedly a remarkable achievement in AI development. Its ability to understand complex requirements and generate sophisticated code structures is genuinely impressive. But for game development specifically, it remains firmly experimental: a tool that can produce stunning visuals and well-organized code while fundamentally failing to deliver playable games.

The next time you see a viral video of an AI generating a perfect game from one prompt, remember my broken Minecraft experiment. The magic is real—it’s just not finished yet.